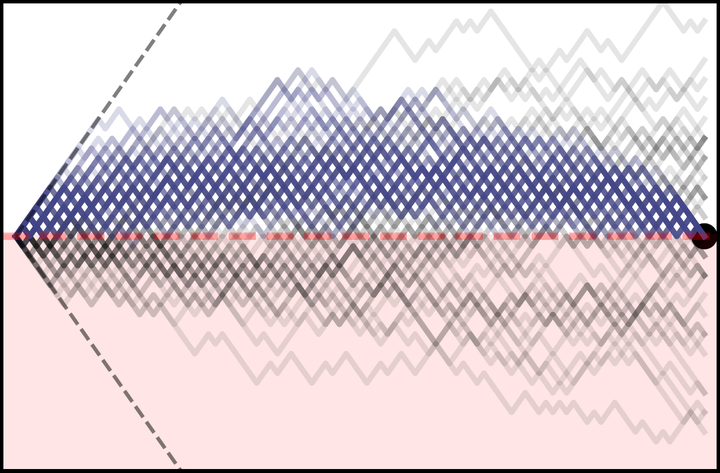

Sample walks generated by the dynamics learnt in trying to efficiently sample random walker excursions.

Sample walks generated by the dynamics learnt in trying to efficiently sample random walker excursions.Abstract

Very often when studying non-equilibrium systems one is interested in analysing dynamical behaviour that occurs with very low probability, so called rare events. In practice, since rare events are by definition atypical, they are often difficult to access in a statistically significant way. What are required are strategies to “make rare events typical” so that they can be generated on demand. Here we present such a general approach to adaptively construct a dynamics that efficiently samples atypical events. We do so by exploiting the methods of reinforcement learning (RL), which refers to the set of machine learning techniques aimed at finding the optimal behaviour to maximise a reward associated with the dynamics. We consider the general perspective of dynamical trajectory ensembles, whereby rare events are described in terms of ensemble reweighting. By minimising the distance between a reweighted ensemble and that of a suitably parametrised controlled dynamics we arrive at a set of methods similar to those of RL to numerically approximate the optimal dynamics that realises the rare behaviour of interest. As simple illustrations we consider in detail the problem of excursions of a random walker, for the case of rare events with a finite time horizon; and the problem of a studying current statistics of a particle hopping in a ring geometry, for the case of an infinite time horizon. We discuss natural extensions of the ideas presented here, including to continuous-time Markov systems, first passage time problems and non-Markovian dynamics.